I just finished my own disaster recovery operation. There are still a few loose ends but the major work is done. I’m fully operational.

Saturday, Dec. 20, 2014 was a bad day. It could have been worse. Much worse. It could have shut my company down forever. But it didn’t – I recovered, but it wasn’t easy and I made some mistakes. Here’s a summary of what I did right preparing for it, what I did wrong, how I recovered, and lessons learned. Hopefully others can learn from my experience.

My business critical systems include a file/print server, an email server, and now my web server. I also operate an OpenVPN server that lets me connect back here when I’m on the road, but I don’t need it up and running every day.

My file/print server has everything inside it. My Quickbooks data, copies of every proposal I’ve done, how-to documentation, my “Bullseye Breach” book I’ve been working on for the past year, marketing stuff, copies of customer firewall scripts, thousands of pictures and videos, and the list goes on. My email server is my conduit to the world. By now, there are more than 20,000 email messages tucked away in various folders and hundreds of customer notes. When customers call with questions and I look like a genius with an immediate answer, those notes are my secret sauce. Without those two servers, I’m not able to operate. There’s too much information about too much technology with too many customers to keep it all in my head.

And then my web server. Infrasupport has had different web sites over the years, but none were worth much and I never put significant effort into any of them. I finally got serious in early 2013 when I committed to Red Hat I would have a decent website up and running within 3 weeks. I wasn’t sure how I would get that done, and it took me more like 2 months to learn enough about WordPress to put something together, but I finally got a nice website up and running. And I’ve gradually added content, including this blog post right now. The idea was – and still is – the website would become a repository of how-to information and business experience potential customers could use as a tool. It builds credibility and hopefully a few will call and use me for some projects. I’ve sent links to “How to spot a phishy email” and other articles to dozens of potential customers by now.

Somewhere over the past 22 months, my website also became a business critical system. But I didn’t realize it until after my disaster. That cost me significant sleep. But I’m getting ahead of myself.

All those virtual machines live inside a RHEV (Red Hat Enterprise Virtualization) environment. One physical system holds all the storage for all the virtual machines, and then a couple of other low cost host systems provide CPU power. This is not ideal. The proper way to do this is put the storage in a SAN or something with redundancy. But, like all customers, I operate on a limited budget, so I took the risk of putting all my eggs in this basket. I made the choice and given the cost constraints I need to live with, I would make the same choice again.

I have a large removable hard drive inside a PC next to this environment and I use Windows Server Backup every night to back up my servers to directories on this hard drive. And I have a script that rotates the saveset names and keeps 5 backup copies of each server.

Ideally, I should also find a way to keep backups offsite in case my house burns down. I’m still working on that. Budget constraints again. For now – hopefully I’ll be home if a fire breaks out and I can grab that PC with all my backups and bring it outside. And hopefully, no Minnesota tornado or other natural disaster will destroy my house. I chose to live with that risk, but I’m open to revisiting that choice if an opportunity presents itself.

So what happened?

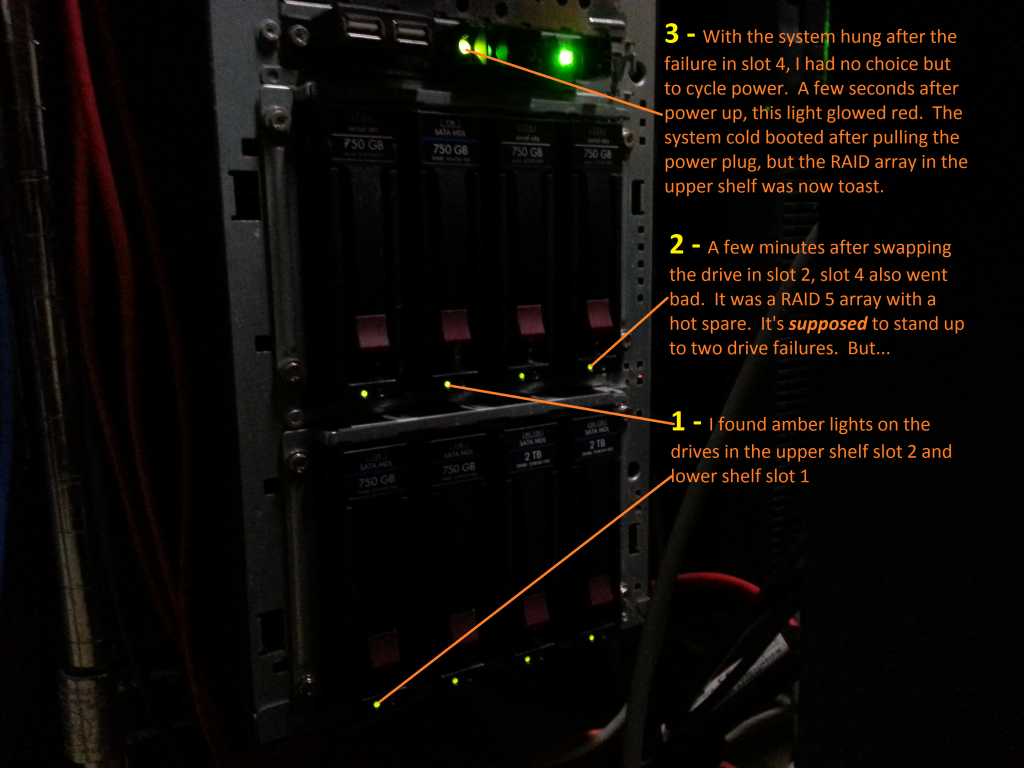

The picture above summarizes it. **But see the update added later at the end of this post.** I walked downstairs around 5:30 PM on Saturday and was shocked to find not one, but two of my 750 GB drives showing amber lights, meaning something was wrong with them. But I could still survive the failures. The RAID 5 array in the upper shelf with my virtual machine data had a hot spare, so it should have been able to stand up to two failing drives. At this point only one upper shelf drive was offline, so it should have been rebuilding itself onto the hot spare. The 750 GB drives in the bottom shelf with the system boot drive were mirrored, so that array should (and did) survive one drive failure.

I needed to do some hot-swapping before anything else went wrong. I have some spare 750 GB drives, so I hot-swapped the failed drive in the upper shelf. My plan was to let that RAID set rebuild, then swap the lower drive for the mirror set to rebuild. And I would run diagnostics on the replaced drives to see what was wrong with them.

I bought the two 2 TB drives in slots 3 and 4 of the lower shelf a few months ago and set them up as a mirror set, but they were not in production yet.

Another note. This turns out to be significant. It seems my HP 750 GB hotswap drives have a firmware issue. Firmware level HPG1 has a known issue where the drives declare themselves offline when they’re really fine. The cure is to update the firmware to the latest version, HPG6. I stumbled onto that problem a couple months ago when I brought in some additional 750 GB drives and they kept declaring themselves offline. I updated all my additional drives, but did not update the drives already in place in the upper shelf – they had been running for 4+ years without a problem. Don’t fix it if it ain’t broke. This decision would bite me in a few minutes.

After swapping the drive, I hopped in the car to pick up some takeout food for the family. I wouldn’t see my own dinner until around midnight.

I came back about 1/2 hour later and was shocked to find the drive in the upper shelf, slot 4 also showing an amber light. And my storage server was hung. So were all the virtual machines that depended on it. Poof – just like that, I was offline. Everything was dead.

In my fictional “Bullseye Breach” book, one of the characters gets physically sick when he realizes the consequences of a server issue in his company. That’s how I felt. My stomach churned, my hands started shaking and I felt dizzy. Everything was dead. No choice but to power cycle the system. After cycling the power, that main system status light glowed red, meaning some kind of overall systemic failure.

That’s how fast an entire IT operation can change from smoothly running to a major mess. And that’s why good IT people are freaks about redundancy – because nobody likes to experience what I went through Saturday night.

Faced with another ugly choice, I pulled the power plug on that server and cold booted it. That cleared the red light and it booted. The drive in upper shelf slot 4 declared itself good again – its problem was that old HPG1 firmware. So now I had a bootable storage server, but the storage I cared about with all my virtual machine images was a worthless pile of scrambled electronic bits.

I tried every trick in the book to recover that upper shelf array. Nothing worked, and deep down inside, I already knew it was toast. Two drives failed. The controller that was supposed to handle it also failed. **This sentence turns out to be wrong. See the update added later at the end.** And one of the two drives in the bottom mirror set was also dead.

Time to face facts.

Recovery

I hot swapped a replacement drive for the failed drive in the bottom shelf. The failed drive already had the new firmware, so I ran a bunch of diagnostics against it on a different system. The diagnostics suggested this drive really was bad. Diagnostics also suggested the original drive in upper slot 2 was bad. That explained the drive failures. Why the controller forced me to pull the power plug after the multiple failures is anyone’s guess.

I put my 2 TB mirror set into production and built a brand new virtualization environment on it. The backups for my file/print and email server virtual machines were good and I had both of those up and running by Sunday afternoon.

The website…

Well, that was a different story. I never backed it up. Not once. Never bothered with it. What a dork!

I had to rebuild the website from scratch. To make matters worse, the WordPress theme I used is no longer easily available and no longer supported. And it had some custom CSS commands to give it the exact look I wanted. And it was all gone.

Fortunately for me, Brewster Kahle’s mom apparently recorded every news program she could get in front of from sometime in the 1970s until her death. That inspired Brewster Kahle to build a website named web.archive.org. I’ve never met Brewster, but I am deeply indebted to him. His archive had copies of nearly all my web pages and pointers to some supporting pictures and videos.

Is my website a critical business system? After my Saturday disaster, an email came in Monday morning from a friend at Red Hat, with subject, “website down.” If my friend at Red Hat was looking for it, so were others. So, yeah, it’s critical.

I spent the next 3 days and nights rebuilding and by Christmas eve, Dec. 24, most of the content was back online. Google’s caches and my memory helped rebuild the rest and by 6 AM Christmas morning, the website was fully functional again. As of this writing, I am missing only one piece of content. It was a screen shot supporting a blog post I wrote about the mess at healthcare.gov back in Oct. 2013. That’s it. That’s the only missing content. One screen shot from an old, forgotten blog post. And the new website has updated plugins for SEO and other functions, so it’s better than the old website.

My headache will no doubt go away soon and my hands don’t shake anymore. I slept most of last night. It felt good.

Lessons Learned

Backups are important. Duh! I don’t have automated website backups yet, but the pieces are in place and I’ll whip up some scripts soon. In the meantime, I’ll backup the database and content by hand as soon as I post this blog entry. And every time I change anything. I never want to experience the last few days again. And I don’t want to even think about what shape I would be in without good backups of my file/print and email servers.

Busy business owners should periodically inventory their systems and update what’s critical and what can wait a few days when disaster strikes. I messed up here. I should have realized how important my website has become these past several months, especially since I’m using it to help promote my new book. Fortunately for me, it’s a simple website and I was able to rebuild it by hand. If it had been more complex, well, it scares me to think about it.

Finally – disasters come in many shapes. They don’t have to be fires or tornadoes or terrorist attacks. This disaster would have been a routine hardware failure in other circumstances and will never make even the back pages of any newspaper.

If this post is helpful and you want to discuss planning for your own business continuity, please contact us and I’ll be glad to talk to you. I lived through a disaster. You can too, especially if you plan ahead.

Update from early January, 2015 – I now have a script to automatically backup my website. I tested a restore from bare virtual metal and it worked – I ended up with an identical website copy. And I documented the details.

Update several weeks later. After examining the RAID set in that upper shelf in more detail, I found out it was not RAID 5 with a hot spare as I originally thought. Instead, it was RAID 10, or mirroring with striping. RAID 10 sets perform better than RAID 5 and can stand up to some cases of multiple drive failures, but if the wrong two drives fail, the whole array is dead. That’s what happened in this case. With poor quality 750 GB drives, this setup was an ugly scenario waiting to happen.

0 comments on “Business Continuity and Disaster Recovery; My Apollo 13 week”

6 Pings/Trackbacks for "Business Continuity and Disaster Recovery; My Apollo 13 week"

[…] and chief technology officer (CTO), Greg Scott, of Infrasupport Corporation faced his own Apollo 13 moment after a catastrophic server failure threatened to destroy his company. He says this […]

[…] is no good – see my Infrasupport blog post about how I recovered from my own IT disaster, here.] reduced in size to 50 percent to capture most of the page. Notice the popup message at the bottom […]

[…] Redundancy is almost everywhere in the IT world. Almost, because it’s not generally found in user computers or cell phones, which explains why most people don’t think about it and why these systems break so often. In the back room, nearly all modern servers have at least some redundant components, especially around storage. IT people are all too familiar with the acronym, RAID, which stands for Redundant Array of Independent Disks. Depending on the configuration, RAID sets can tolerate one and sometimes two disk failures and still continue operating. But not always. I lived through one such failure and documented it in a blog post here. […]

[…] posted December 26, 2014 on my Infrasupport website, right here. I copied it here on June 21, 2017 and backdated to match the original […]

[…] Redundancy is almost everywhere in the IT world. Almost, because it’s not generally found in user computers or cell phones, which explains why most people don’t think about it and why these systems break so often. In the back room, nearly all modern servers have at least some redundant components, especially around storage. IT people are all too familiar with the acronym, RAID, which stands for Redundant Array of Independent Disks. Depending on the configuration, RAID sets can tolerate one and sometimes two disk failures and still continue operating. But not always. I lived through one such failure and documented it in a blog post here. […]

[…] is no good – see my Infrasupport blog post about how I recovered from my own IT disaster, here.] reduced in size to 50 percent to capture most of the page. Notice the popup message at the bottom […]